The Ultimate Prompt Engineering Guide for 2026: From Basics to Agentic Workflows

This comprehensive prompt engineering guide covers everything from basic chain-of-thought to building autonomous agentic workflows in 2026.

If you are still typing “Write me a blog post about X” into ChatGPT and expecting magic, you are living in 2023.

In 2026, Prompt Engineering is no longer just about writing clever sentences. It has evolved into Agentic Engineering - the art of orchestrating autonomous AI systems to perform complex, multi-step workflows. As a developer who has transitioned from simple chatbots to building complex cognitive architectures, I’ve realized that a static tutorial isn’t enough; you need a dynamic, modern framework to stay ahead.

I’ve seen the shift firsthand. As developers, we’ve moved from “chatting” with bots to building cognitive architectures that can reason, plan, and execute. This hasn’t been an overnight change, but a steady evolution that requires a new kind of mindset - one that focuses on systems, not just syntax.

Here is your essential prompt engineering guide for mastering this skill in 2026, moving from the fundamentals to the cutting edge of agentic workflows.

The Evolution: Why You Need A New Approach

Three years ago, the “magic” was getting an AI to write a poem. Today, the bar is infinitely higher. We need AI to debug distributed systems, plan marketing campaigns based on real-time data, and refactor legacy codebases while adhering to strict style guides. A simple prompt fails at these tasks. You need structure. You need context. You need a system.

Many resources online are outdated. They focus on “hacks” that no longer work with models like GPT-5.2 or Claude Opus 4.5. This article focuses on the timeless principles and the new agentic patterns that define professional AI development in 2026.

Level 1: The Fundamentals

Before we build agents, you must master the building blocks. These principles haven’t changed, but many developers still ignore them. Any effective strategy must start here.

1. The Persona Pattern

Don’t just ask for a solution. Define the expert. Adopting a specific persona drastically changes the output quality.

- Bad: “Fix this code.”

- Good: “You are a Senior Security Engineer auditing a Node.js express application. Review the following authentication middleware for common vulnerabilities (referencing OWASP Top 10 for LLM Applications 2025, specifically ‘LLM01: Prompt Injection’ and ‘LLM06: Excessive Agency’) and rewrite it to handle session fixation and brute-force attacks securely.”

By setting the persona, you are essentially narrowing the search space of the model’s weights. This is a core tenet of clear communication with LLMs.

2. Context Caching and Management

In 2026, context windows are massive (millions of tokens), but they aren’t infinite - and they cost money. A modern prompt engineering guide must address Context Caching.

We now use Context Caching features (like XC-Cache architectures common in 2025) to “pin” heavy documentation (API specs, brand guides) so we don’t re-compute embeddings every time. We recommend structured prompts where static context (who you are, what the project is) is separated from dynamic context (the specific bug).

- Action: Structure your prompts so static context is separated. Keep your system prompts clean and distinct from user inputs to avoid confusion.

3. Zero-Shot, One-Shot, and Few-Shot Prompting

No technical guide would be complete without explaining these concepts.

- Zero-Shot: Asking without examples. “Translate this.”

- One-Shot: Providing one example.

- Few-Shot: Providing multiple examples.

In 2026, few-shot prompting is still king for consistent formatting. We highly recommend adding 3-5 examples for any structured data extraction task.

Level 2: Advanced Reasoning

This is where we separate the amateurs from the engineers. This section focuses on forcing the model to “think” before it speaks.

AI models are probabilistic engines. If you ask a complex question directly, they often guess. To mitigate this, we force them to utilize reasoning strategies.

Chain of Thought (CoT)

You explicitly ask the model to plan before it acts. As emphasized throughout this prompt engineering guide, reasoning is the bridge between a vague request and a precise output.

“Let’s approach this debugging request step-by-step. First, analyze the stack trace to identify the exact line of failure. Second, hypothesize three potential causes based on the recent commit history. Third, propose a fix for the most likely cause. Finally, generate the corrected code block.”

By forcing the model to output its reasoning tokens first, you give it “time to think,” significantly reducing hallucinations. This technique is a staple in every advanced workflow.

Tree of Thoughts (ToT)

For critical decision-making, we ask the model to generate three possible solutions, evaluate the pros/cons of each, and then select the best one. This mimics human brainstorming. A robust strategy suggests using ToT for architectural decisions or complex debugging scenarios where a single path might lead to a dead end.

Generated Knowledge Prompting

Sometimes the model has the knowledge but needs to be “primed.” We suggest asking the model to first generate relevant facts about a topic before answering the question. This two-step process often yields higher accuracy than a direct query.

Level 3: The 2026 Standard - Agentic Workflows

This is the frontier. We are no longer just prompting; we are building Systems. We transition now from simple text generation to autonomous action.

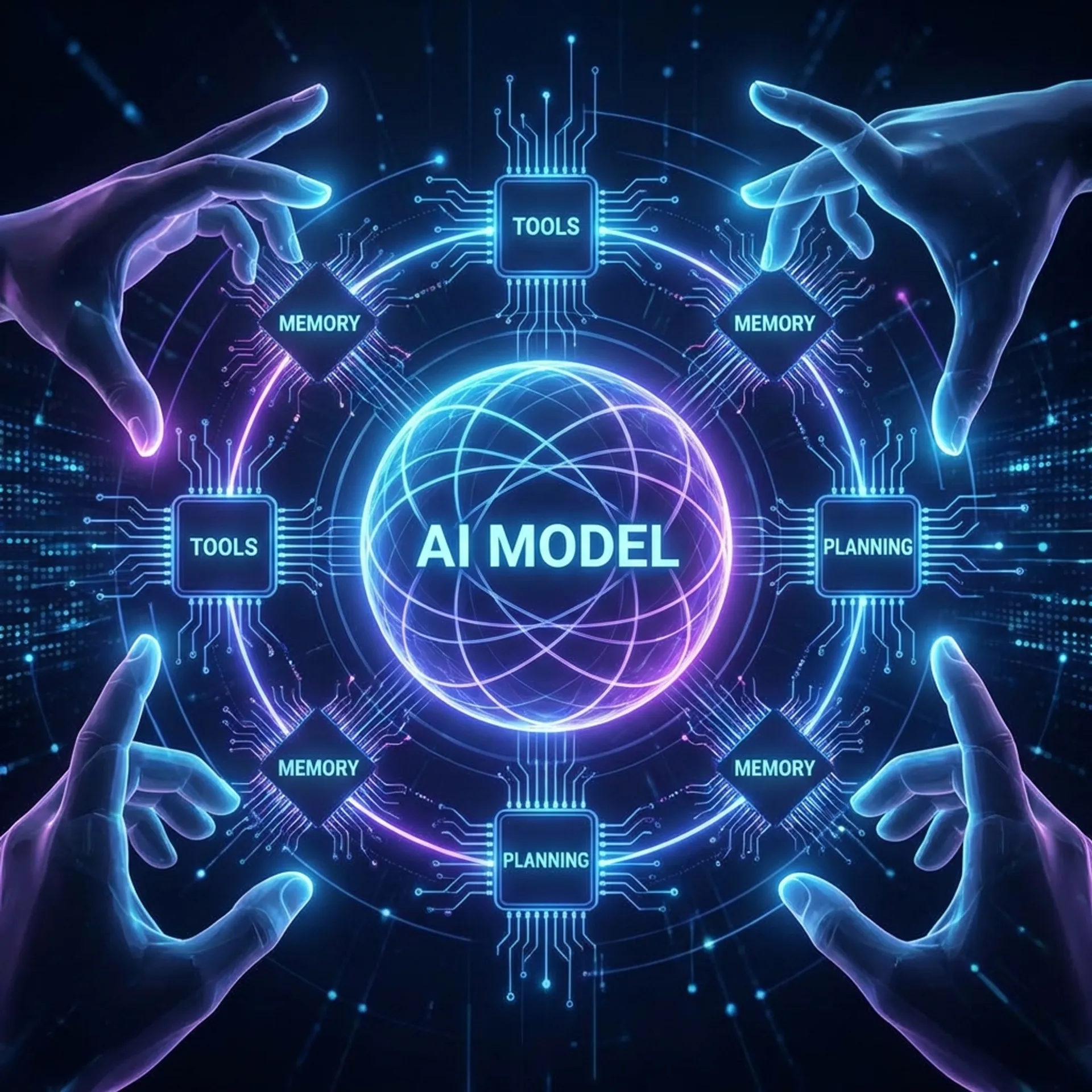

An agent isn’t just a prompt; it’s a loop.

- Perceive: The AI reads the state (e.g., a GitHub issue).

- Reason: It plans a solution (using CoT).

- Act: It uses Tools (runs a script, queries a database, calls an API).

- Reflect: It looks at the output. Did it work? If not, it self-corrects.

The Shift to “Orchestration”

As a reader of this guide, you need to understand that your job isn’t to write the perfect poem. It’s to design the Cognitive Architecture of an agent.

- What tools does it need access to?

- What are its guardrails?

- How does it handle failure?

For example, a standard tutorial might tell you how to summarize news. But an agentic engineer builds an agent that:

- Fetches the top 10 RSS feeds (Tool:

fetch_rss). - Filters for relevance using a specialized prompt.

- Synthesizes a report.

- Posts it to Slack (Tool:

slack_api).

This is Agentic Engineering, and it is the inevitable conclusion of our journey.

Level 4: Tools of the Trade

To follow these best practices effectively, you need the right tools. In 2026, we don’t just paste into a chat box.

- Prompt Management Systems (CMS): treating prompts as code, version controlling them.

- Evaluation Frameworks: You cannot improve what you cannot measure. We insist on using “evals” - automated tests that check if your prompt outputs match expected results.

- Playgrounds: specialized environments for testing model temperature, top-p, and frequency penalties.

Professional development in this space always emphasizes tooling. If you are manually copying and pasting interactions to test them, you are likely working inefficiently.

Common Mistakes to Avoid

Even with the best resources, developers make mistakes. Here are a few to watch out for:

- Over-Complicating: Sometimes a simple instruction is better. Avoid adding too much fluff.

- Ignoring Token Costs: Large contexts are expensive. Be concise with your context data.

- Neglecting Security: Prompt Injection is real. You need “sanitization” layers before your input hits the LLM.

- Over-Reliance on Zero-Shot: While impressive, zero-shot prompting often lacks reliability for production systems. Don’t be afraid to provide examples (few-shot) or clear constraints to guide the model. It’s not “cheating”; it’s engineering.

Building Your Own Internal Guide

Every organization needs its own internal documentation. Why? Because your domain context is unique.

- Document your patterns: What works for your specific API?

- Share examples: Create a library of “gold standard” examples.

- Iterate: Documentation must be living.

By creating a specialized knowledge base for your team, you scale the knowledge of your best engineers across the entire company.

Practical Advice for 2026

If you want to stay relevant, stop treating AI like a chatbot and start treating it like a programmable logic engine:

- Learn Tool Use: Understand how to define functions that an LLM can call.

- Master Evaluation: How do you know your prompt is good? Run automated evaluations against a test set.

- Think in Workflows: Don’t ask “What prompt solves this?” Ask “What sequence of steps solves this?”

Conclusion

The title “Prompt Engineer” might disappear, but the skill of AI Orchestration is here to stay. Whether you are writing code or managing a business, the ability to translate human intent into reliable machine action is the most valuable skill of the decade.

This prompt engineering guide has covered the journey from basic persona patterns to complex, self-healing agentic workflows. It is more than just text; it is a blueprint for the future of work.

Start looking at your workflow today. Where can you insert an agent, not just a prompt? Use this article as your reference. Revisit it when you are stuck. Share it with your team to align on best practices.

The future is agentic, and you are ready to build it.

Want to understand the foundations of logic before building agents? Read my guide on What DSA Actually Is. Worried about your career in this new era? Check out the Future of Programmers.